Deduplicating the Archive

From 2015 to 2017, the PPA refined its core collection by eliminating 3,729 duplicate works. These duplicates resulted from our initial file transfer from HathiTrust, a partnership of academic and research institutions offering a collection of millions of titles digitized from libraries around the world. We began our active collaboration with HathiTrust in 2012. Partnering with HathiTrust was a necessary and strategic move on our part, but being tied to their metadata posed several problems.

For instance, who assigns all the bibliographic data attached to a HathiTrust digital monograph? How do they choose between spellings of authors' names? How do they designate volume numbers? What about specific editions of the same work produced in the same year, but by different publishers? These choices vary from one partner library to the next, and HathiTrust has over 100 partners, many of which have more than one library within their institution. You can imagine how easily standardization gets lost.

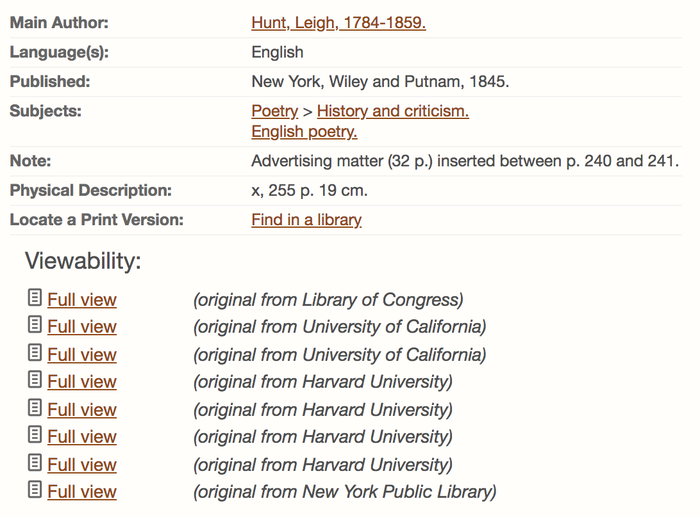

Partner institutions submit their digital surrogates to HathiTrust, which acts as a platform through which you might discover the actual book in the actual library. That means that if the Library of Congress, the University of California, and Harvard all own a book and each sent its digital surrogate to HathiTrust, HathiTrust holds all of those surrogates.

Copies of Imagination and Fancy in HathiTrust, digitized by various host libraries

When you partner with HathiTrust and ask for access to thousands of titles, they'll give you every copy of each title they have. Titles themselves could also be miskeyed, and authors' names or publication dates could vary between libraries. The lack of standardization across libraries and within HathiTrust, together with the way HathiTrust delivered multiple copies of the same work, led the PPA initially to host thousands of duplicate copies [for a more in-depth account of how we chose which works to keep, see Colette Johnson's essay on Samuel Johnson]. Because duplicates were skewing users' search results on our beta site, we knew we would have to clean our initial dataset and tackle this problem head- (or rather hands-) on.

Take, for example, Imagination and Fancy, a "who's who" of poetry written by Leigh Hunt and published in 1845. The 2.0 version of the PPA site hosted five copies of the same text, from the same publisher, printed in the same year. These were scans of the same book by four different host libraries: Harvard, the Library of Congress, the New York Public Library, and the University of California. Or so we thought.

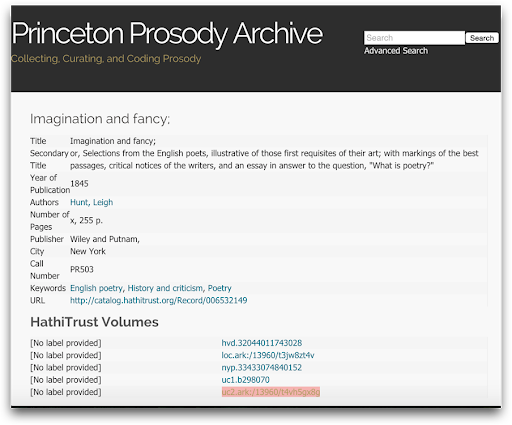

Duplicates in PPA 2.0

Project manager Meagan Wilson did some investigative work and discovered that one of the five was a dead link. For the remaining four, she compared the title pages to verify that each copy contained identical information.

Title pages of the four copies of Imagination and Fancy

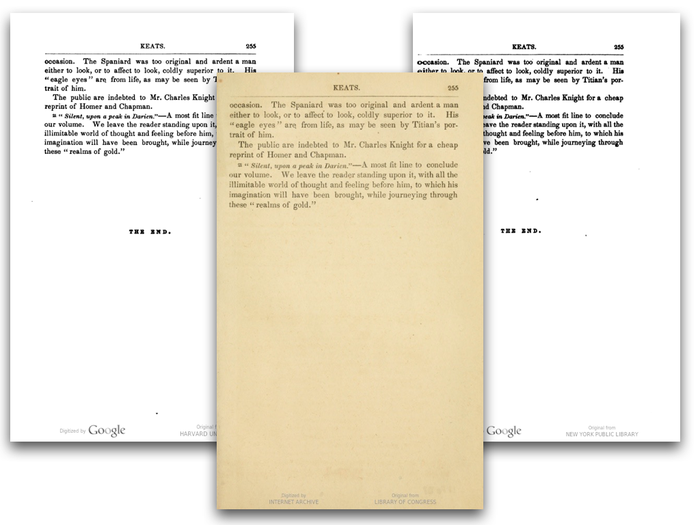

Wilson then did the same comparison with the last page of text in each copy, checking pagination and any added appendices, and made an interesting discovery. Three copies had the same pagination and were nearly identical (one was missing "THE END").

Nearly identical last pages of three copies of Imagination and Fancy

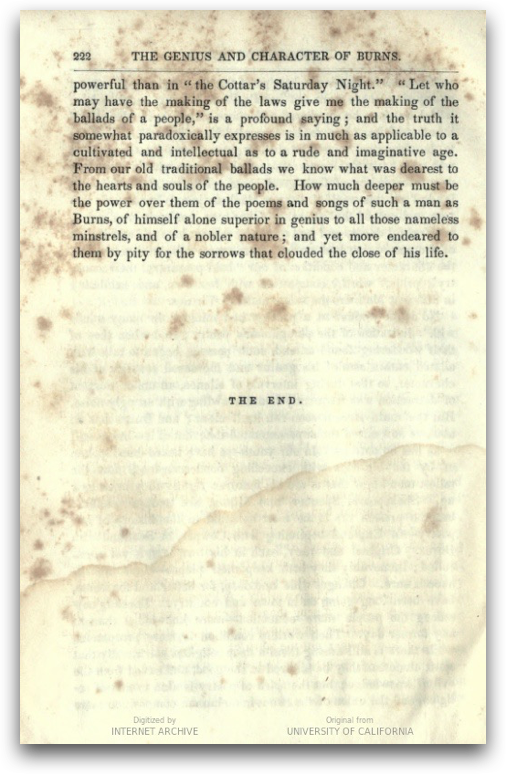

The fourth ended on a completely different essay! The explanation? Wiley and Putnam released a double edition that bound Leigh Hunt's text together with a 222-page essay, "The Genius and Character of Burns." But this double edition had exactly the same metadata, so we would never have known about the discrepancy had Wilson not instinctively looked past the title page.

Different final page of fourth copy

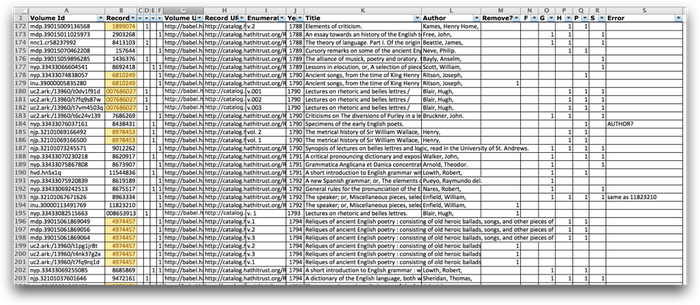

Wilson whittled down the collection this way, virtually by hand! She created a spreadsheet of the metadata, which included what HathiTrust calls "Record IDs": an identifying string of numbers shared by exact copies of a text or by the volumes of a multivolume work. (These are distinct from HathiTrust's "Volume IDs". Each item in HathiTrust has a unique Volume ID, such as nyp.33433074840152, that identifies the originating host institution: in this example, the New York Public Library.)
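To make the difference between the two identifiers concrete, here is a minimal sketch of the first spreadsheet step: grouping Volume IDs by Record ID to surface candidate duplicates. It assumes a hypothetical CSV export with columns named `record_id` and `volume_id`; those column names, the helper functions, and every ID except the NYPL one quoted above are made up for illustration. It also assumes the institution is encoded in the part of the Volume ID before the first dot, as "nyp" is in the example.

```python
import csv
import io
from collections import defaultdict


def split_volume_id(volume_id: str) -> tuple[str, str]:
    """Split a Volume ID into (institution namespace, local identifier)."""
    # Assumption: the namespace is everything before the first "."
    namespace, _, local_id = volume_id.partition(".")
    return namespace, local_id


def copies_by_record(rows) -> dict[str, list[str]]:
    """Group Volume IDs by Record ID, keeping only Record IDs shared by 2+ items."""
    groups: dict[str, list[str]] = defaultdict(list)
    for row in rows:
        groups[row["record_id"]].append(row["volume_id"])
    return {rid: vids for rid, vids in groups.items() if len(vids) > 1}


if __name__ == "__main__":
    # Hypothetical spreadsheet export; apart from the NYPL Volume ID,
    # all IDs and column names are invented for this example.
    sample = (
        "record_id,volume_id\n"
        "100000001,nyp.33433074840152\n"
        "100000001,hvd.example1\n"
        "100000002,njp.example2\n"
    )
    rows = csv.DictReader(io.StringIO(sample))
    for record_id, volume_ids in copies_by_record(rows).items():
        print(record_id, [split_volume_id(v)[0] for v in volume_ids])
```

A shared Record ID only marks a candidate: because the same ID also groups the volumes of a multivolume work, each group still needs a closer look before anything is cut.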

Data cleaning

Wilson ran an algorithm to “dedupe” our list of 8,300 files, giving priority to those copies scanned by libraries with which we have a relationship; for example, we wanted to keep Princeton, Columbia, and NYPL holdings—in that order—because all three libraries belong to a consortium called ReCAP. Because HathiTrust also uses Record IDs to group multivolume works, Wilson had to make sure that the script cut exact copies only, and not multi-volume works. She painstakingly hand-checked these records for accuracy and for discrepancies like the Leigh Hunt example. This process refined the PPA’s collection to just under 5,000 works; that number shows just how many duplicates we were hosting—about 40% of the original 8,300 texts that HathiTrust delivered.
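The selection rule described here can be sketched in a few lines. This is a minimal illustration, not Wilson's actual script. It assumes each item carries a Volume ID, a Record ID, and a hypothetical `volume` field holding the volume designation (for example "v.2"), so that grouping on the pair (Record ID, volume) collapses exact copies without merging the separate volumes of a multivolume work. The namespace prefixes for Princeton ("njp") and Columbia ("nnc1") are assumptions to check against HathiTrust's contributor codes; only "nyp" comes from the example above.

```python
from dataclasses import dataclass

# ASSUMPTION: namespace prefixes for the ReCAP libraries, in priority order
# (Princeton, Columbia, NYPL). Only "nyp" is confirmed by the example Volume ID.
PRIORITY = ("njp", "nnc1", "nyp")


@dataclass(frozen=True)
class Item:
    volume_id: str  # unique per scanned item, e.g. "nyp.33433074840152"
    record_id: str  # shared by copies of a work and by volumes of a multivolume work
    volume: str     # hypothetical volume designation, e.g. "v.2"; "" if single-volume


def rank(item: Item) -> tuple[int, str]:
    """Lower is better: preferred libraries first, then Volume ID as a stable tiebreak."""
    namespace = item.volume_id.partition(".")[0]
    position = PRIORITY.index(namespace) if namespace in PRIORITY else len(PRIORITY)
    return position, item.volume_id


def dedupe(items: list[Item]) -> list[Item]:
    """Keep one copy per (Record ID, volume) so multivolume sets are not collapsed."""
    groups: dict[tuple[str, str], list[Item]] = {}
    for item in items:
        groups.setdefault((item.record_id, item.volume), []).append(item)
    return [min(group, key=rank) for group in groups.values()]


if __name__ == "__main__":
    # Made-up IDs for illustration only.
    items = [
        Item("hvd.example1", "100000001", ""),
        Item("nyp.example2", "100000001", ""),
        Item("njp.example3", "100000001", ""),
        Item("uc1.example4", "100000002", "v.1"),
        Item("uc1.example5", "100000002", "v.2"),
    ]
    for kept in dedupe(items):
        print(kept.volume_id)
```

Here the three copies under the first Record ID reduce to the Princeton scan, while both volumes under the second Record ID survive. A rule like this cannot tell that a copy with identical metadata is really a different book. If the Wiley and Putnam double edition shared a Record ID with the other copies, the script would have treated it as a duplicate, which is why the hand-check still mattered.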

With a clean, deduplicated database, we teamed up with the Center for Digital Humanities at Princeton to create an intuitive user interface with a robust administrative backend. This backend is crucial for managing HathiTrust works and allows PPA team members to edit metadata, add new works, and even group items into filterable collections. In the coming months, the PPA team will be working on metadata corrections so that the PPA's users get the cleanest, most accurate information when they view these items on our site.

Edited by Meredith Martin